Contents

• What is technology?

• Top 20 technologies of the future.

• What is artificial intelligence, and what are its types?

• Artificial intelligence tools and applications.

• How does artificial intelligence work?

• 12 examples of artificial intelligence.

• How to use artificial intelligence in our daily life?

• Top 9 highest paid artificial intelligence companies.

[A]. WHAT IS EXPLAINABLE AI?

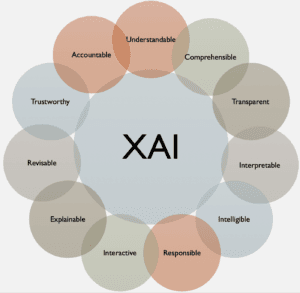

Explainable artificial intelligence (XAI) is a set of processes and methods that allow human users to understand and trust the results and output produced by machine learning algorithms. Explainable AI is used to describe an AI model, its expected impact and its potential biases. It helps characterize model accuracy, fairness, transparency and outcomes in AI-powered decision making. Explainable AI is crucial for an organization in building trust and confidence when putting AI models into production. AI explainability also helps an organization adopt a responsible approach to AI development.

[B]. WHY DOES EXPLAINABLE AI MATTER?

It is crucial for an organization to have a full understanding of its AI decision-making processes, with model monitoring and accountability, rather than trusting them blindly. Explainable AI can help humans understand and explain machine learning (ML) algorithms, deep learning and neural networks.

ML models are often thought of as black boxes that are difficult to interpret.² Neural networks used in deep learning are some of the hardest for a human to understand. Bias, often based on race, gender, age or location, has been a long-standing risk in training AI models. Further, AI model performance can drift or degrade because production data differs from training data. This makes it crucial for a business to continuously monitor and manage models to promote AI explainability while measuring the business impact of using such algorithms. Explainable AI also promotes end user trust, model auditability and productive use of AI. It also mitigates the compliance, legal, security and reputational risks of production AI.
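The drift risk described above (production data differing from training data) can be monitored with a simple statistic. The sketch below computes a Population Stability Index (PSI) for one numeric feature. The function name `psi`, the bin count and the sample values are illustrative assumptions rather than part of any particular monitoring tool, and the 0.2 alert level is only a commonly quoted rule of thumb.

```python
import math

def psi(train, prod, bins=4):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(train), max(train)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all training values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the training range land in the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = shares(train), shares(prod)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))

# Hypothetical feature values seen at training time and in production.
train = [1, 2, 3, 4, 5, 6, 7, 8]
prod = [6, 7, 7, 8, 8, 8, 9, 9]

if psi(train, prod) > 0.2:  # assumed alert threshold
    print("Feature distribution has shifted; review the model.")
```

A value of zero means the two samples fall into the bins in identical proportions; larger values mean the production data looks less like the training data.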

[C]. INSIDE THE BLACK BOX: 5 METHODS FOR EXPLAINABLE ARTIFICIAL INTELLIGENCE (XAI)

(1). Layer-wise relevance propagation (LRP)

(2). Counterfactual method.

(3). Local interpretable model-agnostic explanations (LIME)

(4). Generalized additive model (GAM)

(5). Rationalization.
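To make one of these methods concrete, here is a minimal sketch of the counterfactual idea from item (2): find the smallest change to an input that flips the model's decision. The classifier `predict`, the feature names `a` and `b`, and the step sizes are hypothetical stand-ins for a real black-box model. The search changes only one feature at a time, which is a simplification of what full counterfactual methods do.

```python
def predict(x):
    """Hypothetical black-box classifier; only its outputs are used below."""
    return 1 if 2.0 * x["a"] - 1.0 * x["b"] >= 5.0 else 0

def counterfactual(x, step_sizes, max_steps=50):
    """Search for the smallest single-feature change that flips predict(x).

    Returns (feature, new_value, steps) or None if no flip was found.
    """
    original = predict(x)
    best = None
    for name, step in step_sizes.items():
        for direction in (+1, -1):
            for n in range(1, max_steps + 1):
                candidate = dict(x, **{name: x[name] + direction * n * step})
                if predict(candidate) != original:
                    # Keep the flip that needs the fewest steps.
                    if best is None or n < best[2]:
                        best = (name, candidate[name], n)
                    break
    return best

x = {"a": 1.0, "b": 1.0}
print(counterfactual(x, {"a": 0.5, "b": 0.5}))  # -> ('a', 3.0, 4)
```

The result reads as an explanation: "the decision would have been different if `a` had been 3.0 instead of 1.0". Counting steps is a crude distance measure and assumes the step sizes of different features are comparable.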

[D]. Here are some use cases where explainable AI can be applied:

(1). Healthcare: When diagnosing patients with a disease, explainable AI can explain its diagnosis. It can help doctors explain the diagnosis to patients and explain how a treatment plan will help. This helps build greater trust between patients and their doctors while mitigating potential ethical issues. One example where AI predictions can explain their decisions is diagnosing patients with pneumonia. Another example where explainable AI can be very useful is medical imaging data used for diagnosing cancer.

(2). Manufacturing: Explainable AI could be used to explain why an assembly line isn’t working properly and how it needs to be adjusted over time. This is important for improved machine-to-machine communication and understanding, which helps create greater situational awareness between humans and machines.

(3). Defense: Explainable AI can be useful in military training applications to explain the reasoning behind a decision made by an AI system (e.g., autonomous vehicles). This is important because it mitigates potential ethical challenges, such as explaining why the system misidentified an object or didn’t fire on a target.

(4). Autonomous vehicles: Explainable AI is becoming increasingly important in the automotive industry because of highly publicized accidents caused by autonomous vehicles (such as Uber’s fatal crash with a pedestrian). This has put an emphasis on explainability techniques for AI algorithms, especially in use cases that involve safety-critical decisions. Explainable AI can be used in autonomous vehicles, where explainability provides increased situational awareness in accidents or unexpected situations, which could lead to more responsible operation of the technology (e.g., preventing crashes).

(5). Loan approvals: Explainable AI can be used to explain why a loan was approved or denied. This is important because it mitigates potential ethical challenges by providing a greater level of understanding between humans and machines, which helps build greater trust in AI systems.

(6). Resume screening: Explainable AI could be used to explain why a resume was selected or rejected. This provides a greater level of understanding between humans and machines, which builds greater trust in AI systems while mitigating issues related to bias and unfairness.

(7). Fraud detection: Explainable AI is important for fraud detection in financial services. It can be used to explain why a transaction was flagged as suspicious or legitimate, which mitigates potential ethical challenges related to unfair bias and discrimination when identifying fraudulent transactions.
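As an illustration of the loan approval case in item (5), the sketch below explains a decision made by a simple additive scoring model. Each feature's contribution is its weight times the applicant's distance from a baseline applicant. All names, weights, baseline values and the threshold are invented for the example and do not describe any real lender's model.

```python
# Hypothetical additive scoring model (all numbers are made up).
WEIGHTS = {"income_k": 0.8, "debt_ratio": -40.0, "late_payments": -6.0}
BASELINE = {"income_k": 50.0, "debt_ratio": 0.30, "late_payments": 1.0}
BASE_SCORE = 60.0   # score of the baseline applicant
THRESHOLD = 60.0    # approve at or above this score

def explain(applicant):
    """Return (approved, score, contributions ranked by absolute size)."""
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }
    score = BASE_SCORE + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score >= THRESHOLD, score, ranked

applicant = {"income_k": 55.0, "debt_ratio": 0.50, "late_payments": 3.0}
approved, score, ranked = explain(applicant)
print("approved" if approved else "denied", round(score, 1))
for name, value in ranked:
    print(f"  {name}: {value:+.1f}")
```

For this applicant the largest negative contribution comes from `late_payments`, so the explanation given to the customer would lead with payment history. The same breakdown is harder to obtain from a deep neural network, which is why methods such as LRP and LIME exist.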